Why smart factories cannot exist without secure industrial control systems

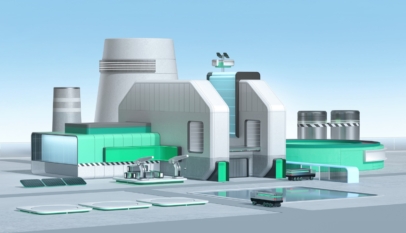

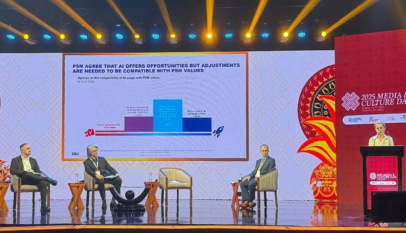

Artificial intelligence (AI) is rapidly entering heavy industry. From power generation to fertilizers, oil refining to manufacturing, companies are deploying AI to optimize energy usage, predict failures, stabilize processes and reduce emissions.

The promise is enormous: self-adjusting plants, autonomous optimization and near-perfect efficiency.

But there is a problem rarely discussed outside engineering circles.

AI does not just observe industrial processes.

It influences them.

And the moment algorithms begin influencing valves, motors, temperatures and pressures, cybersecurity stops being an IT issue and becomes an operational safety requirement.

In reality, AI-driven industry cannot exist without secure industrial control systems and networks.

Industrial AI Is Not Like Office AI

In offices, AI recommendations may affect reports or decisions.

In industry, AI decisions affect physics.

An AI optimizer adjusting a compressor load changes mechanical stress.

A predictive maintenance model delaying shutdown affects equipment life.

An energy optimization model altering combustion impacts safety margins.

These actions are executed through industrial control systems — the specialized computers that directly operate equipment inside plants.

If those systems are manipulated, AI becomes a risk multiplier instead of an efficiency tool.

Connectivity Created the Opportunity — and the Exposure

Industrial facilities historically relied on isolation. Control systems were separated from corporate networks and external access was minimal.

Modern digital transformation changed that.

Today industrial networks connect to:

- Cloud analytics platforms

- Remote monitoring centers

- Vendor maintenance systems

- Centralized operational dashboards

- Enterprise AI optimization engines

This connectivity allows AI to function.

But it also allows unauthorized influence over the same process.

Without cybersecurity, AI does not just optimize operations — it amplifies vulnerabilities.

When AI Trusts Compromised Data

AI models depend entirely on the integrity of their inputs.

If an attacker manipulates sensor values or control feedback:

The model still works perfectly.

It just optimizes the wrong reality.

The result may not be an immediate shutdown.

Instead, the plant may unknowingly operate in harmful conditions:

- Gradual equipment degradation

- Hidden efficiency losses

- Unsafe operating margins

- Product quality deviation

Operators may believe the system is improving performance while it is quietly being steered away from safe operation.

This is why data integrity — not just availability — becomes the most critical cybersecurity objective in AI-driven facilities.
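One way to make the integrity concern concrete is a plausibility gate in front of the model. The sketch below is illustrative only: the function name `validate_reading`, the thresholds and the three-transmitter setup are assumptions, not part of any particular product. It rejects readings that fall outside a physically possible range or that disagree with redundant sensors, so the model is not handed values it should not trust.

```python
from statistics import median

def validate_reading(redundant_values, low, high, max_spread):
    """Return a trusted value, or None if the readings look implausible.

    Hypothetical helper: limits and tolerance would come from process engineering.
    """
    # Reject an empty set or any reading outside the physically possible range.
    if not redundant_values or any(v < low or v > high for v in redundant_values):
        return None
    # Reject if redundant sensors disagree by more than the allowed tolerance.
    if max(redundant_values) - min(redundant_values) > max_spread:
        return None
    # Otherwise use the median so a single slightly-off sensor has little effect.
    return median(redundant_values)

# Three hypothetical pressure transmitters on the same vessel, in bar.
print(validate_reading([10.1, 10.0, 10.2], low=0.0, high=25.0, max_spread=0.5))
print(validate_reading([10.1, 10.0, 14.8], low=0.0, high=25.0, max_spread=0.5))
```

A check like this is only one layer. An attacker who can falsify every redundant signal consistently would pass it, which is why the network and device protections discussed in this article still matter.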

The Shift from Protecting Computers to Protecting Decisions

Traditional industrial cybersecurity focused on preventing unauthorized access.

AI changes the question from:

“Can someone log into the system?”

to

“Can someone influence what the system decides?”

An attacker no longer needs to directly start or stop a machine.

Altering the inputs guiding automated decisions can achieve the same outcome — more slowly and more convincingly.

This makes cybersecurity part of operational reliability, not just information protection.

Why Weak Foundations Make AI Dangerous

Organizations often deploy AI pilots faster than they modernize their control system security architecture.

Common weaknesses still found in many facilities:

- Shared engineering workstations

- Flat industrial networks

- Uncontrolled remote vendor access

- Limited monitoring of configuration changes

- Trust in internal traffic without verification

AI layered on top of these environments does not create intelligence.

It creates automated risk.

Instead of operators making occasional mistakes, the plant can now execute incorrect actions continuously and confidently.
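A common mitigation for this kind of continuous, confident error is to keep a simple, independent guard between the AI and the controller. The following is a minimal sketch under stated assumptions: `guard_setpoint`, the limits and the step size are hypothetical, and real limits would come from the plant's own engineering and safety studies.

```python
def guard_setpoint(requested, current, low, high, max_step):
    """Clamp an AI-recommended setpoint to a safe envelope and a maximum step.

    Hypothetical helper for illustration; not a substitute for a safety system.
    """
    # Keep the request inside the engineering limits.
    bounded = min(max(requested, low), high)
    # Limit how far the setpoint may move in a single update.
    step = min(max(bounded - current, -max_step), max_step)
    return current + step

# The optimizer asks for 95.0, but the envelope tops out at 85.0
# and only a 5.0 change per update is allowed.
print(guard_setpoint(requested=95.0, current=70.0, low=40.0, high=85.0, max_step=5.0))
```

The guard does not make the recommendation correct. It only bounds how far and how fast a wrong recommendation can move the process, which gives operators time to notice.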

Cybersecurity as the Enabler of Industrial AI

Properly secured systems change the picture entirely.

When industrial networks implement:

- Segmented architectures

- Continuous monitoring

- Verified command paths

- Strong change management

- Asset ownership and accountability

AI gains reliable ground truth.

In that environment, AI becomes what industry expects: a stability and safety enhancer rather than a vulnerability amplifier.

Cybersecurity is therefore not a barrier to innovation — it is the foundation that allows automation to be trusted.
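As one illustration of what a verified command path could look like, the sketch below attaches a message authentication code to each setpoint command using Python's standard `hmac` module, so the receiver can detect tampering in transit. The command fields, the point name `FIC-101.SP` and the demo key are assumptions made for the example. Real deployments would also need key management and replay protection, neither of which is shown here.

```python
import hashlib
import hmac
import json

def sign_command(key: bytes, command: dict) -> str:
    """Return a hex HMAC-SHA256 tag over a canonical JSON encoding of the command."""
    payload = json.dumps(command, sort_keys=True).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()

def verify_command(key: bytes, command: dict, tag: str) -> bool:
    """Recompute the tag and compare it in constant time."""
    expected = sign_command(key, command)
    return hmac.compare_digest(expected, tag)

# Hypothetical shared key and command, for illustration only.
key = b"demo-key-not-for-production"
command = {"point": "FIC-101.SP", "value": 42.5, "seq": 17}
tag = sign_command(key, command)

print(verify_command(key, command, tag))    # True: command is unchanged

tampered = dict(command, value=99.0)
print(verify_command(key, tampered, tag))   # False: value was altered in transit
```

The sequence number is carried in the command but not enforced in this sketch. A receiver that tracked the last accepted value could use it to reject replayed commands.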

The Coming Reality

Industry is moving toward autonomous operations.

But autonomy requires trust.

Trust requires integrity.

Integrity requires cybersecurity.

The question is no longer whether facilities will adopt AI.

The real question is whether they will secure their industrial control systems before allowing algorithms to influence physical processes.

Because in industrial environments, incorrect decisions do not crash software.

They move steel, release pressure, spin turbines and open valves.

And intelligent systems can only be safe when the systems guiding them are secure.

About the Author

Asad Naeem is an industrial automation and OT cybersecurity professional with years of experience in securing and modernizing process industry control systems. He works at the intersection of automation, functional safety, and industrial cybersecurity, focusing on building resilient, reliable, and secure operations.